Eight episodes per season is still not enough for a series like this. The story did not have time to really develop, but I am still curious about what happens next, which is a good sign, especially since a second season has been confirmed. The final episode was pretty boring, though.

Pied Piper's team made the most of the bruises Erlich picked up during the presentation. First, the conference organizers, frightened by a possible lawsuit (the lawyer-guitarist called), offered to send Pied Piper through to the next round without any competition. Second, Erlich also got them a hotel suite.

Erlich is insufferable, but he has his uses. Every team should have someone like him: as brazen as a tractor, pushy, a smug optimist who always has a stupid idea at the ready and can smack a nasty little boy. Not everyone has the guts to do that.

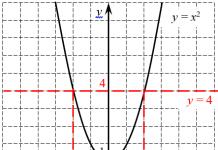

Everything seemed to be fine, but then the startup's leaders went to watch Belson's presentation. He presented not only a large-scale project with a pile of features (Hooli has plenty of services that can be integrated) but also a certain Weissman coefficient, that is, a compression ratio, and his was the same as Pied Piper's. The Weissman coefficient was created specifically for the series by two Stanford consultants, Weissman and Misra.

In short, it turns out that the nasty competitors did manage to take Richard's algorithm apart through reverse engineering. Pied Piper has nothing to show tomorrow.

Erlich tried to troll Belson, accusing him of all mortal sins from alcoholism to sexual harassment, Jared went crazy, and Dinesh and Gilfoyle tried to find themselves a new job.

By the evening, when Jared had been released by the police, everyone gathered at the hotel to figure out what to do. Nobody wants to face a public execution tomorrow, except Erlich, of course, who believes that public executions are very popular and that all of this is show business anyway. Either way, he is going to win, even if he personally has to jerk off every dude in the room. The idea was a hit because, as I wrote recently, programmers can get carried away by any problem, no matter how malicious or stupid it is. While they were calculating the conditions under which Erlich could jerk everyone off in the shortest possible time, Richard had an idea.

![]()

No, this is not Richard's idea.

This is Pied Piper's team solving the Erlich problem.

As you can guess, everything ended well and Pied Piper received 50 thousand dollars. And Peter Gregory told them he wasn't upset.

My biggest regret is that we won't see Peter Gregory again. He was the best character of all. I don't know whether Mike Judge can find Pied Piper another investor who is just as crazy.

The compression ratio is the main characteristic of a compression algorithm. It is defined as the ratio of the size of the original uncompressed data to the size of the compressed data, that is, $k = S_o / S_c$, where $k$ is the compression ratio, $S_o$ is the size of the original data, and $S_c$ is the size of the compressed data. Thus, the higher the compression ratio, the more efficient the algorithm. It should be noted:

if $k = 1$, the algorithm performs no compression, that is, the output message is the same size as the input;

if $k < 1$, the algorithm produces a message larger than the uncompressed one, that is, it does "harmful" work.
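To make the definition concrete, here is a minimal Python sketch that measures $k = S_o / S_c$ for two inputs. It assumes zlib as the compressor purely for illustration (the text does not name one), and the helper name `compression_ratio` and both sample inputs are hypothetical.

```python
import os
import zlib


def compression_ratio(data: bytes) -> float:
    """Return k = S_o / S_c, using zlib at its default level as the compressor."""
    compressed = zlib.compress(data)
    return len(data) / len(compressed)


# Illustrative inputs (assumptions, not taken from the text).
redundant = b"pied piper " * 1000   # highly repetitive, so it should give k > 1
random_like = os.urandom(11000)      # no exploitable redundancy, so k < 1 is expected

print(f"redundant:   k = {compression_ratio(redundant):.2f}")
print(f"random-like: k = {compression_ratio(random_like):.4f}")
```

The random input comes out slightly larger than it went in because the compressed container adds a few bytes of framing, which is exactly the "harmful" case.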

The situation with $k < 1$ is entirely possible in compression. It is fundamentally impossible to build a lossless compression algorithm that never lengthens any input and still shortens some of them. The reason is a counting argument: there are exactly $2^n$ distinct messages of length $n$ bits, but only $2^n - 1$ distinct messages shorter than $n$ bits, so there are not enough shorter messages to go around. This means that it is impossible to map all original messages unambiguously to compressed ones: either some original messages will have no compressed representation, or several original messages will share the same compressed representation and so cannot be told apart. However, even when the compression algorithm would increase the size of the original data, it is easy to guarantee that the size grows by no more than 1 bit. Then, even in the worst case, the inequality $k \ge \frac{S_o}{S_o + 1}$ holds. This is done as follows: if the compressed data is smaller than the original, we return the compressed data with a "1" bit prepended; otherwise we return the original data with a "0" bit prepended. An example of how this is implemented in pseudo-C++ is shown below:

    #include <vector>

    // bin_data_t is an arbitrary variable-length sequence of bits
    using bin_data_t = std::vector<bool>;

    // arch() implements some data compression algorithm and is supplied elsewhere
    bin_data_t arch(const bin_data_t& input);

    bin_data_t compress(bin_data_t input) {
        bin_data_t output = arch(input);
        if (output.size() < input.size()) {       // the compressed data is smaller than the original
            output.insert(output.begin(), true);  // add a "1" bit to the beginning of the sequence
            return output;                        // return the compressed sequence with "1" added
        }
        // otherwise the compressed data is greater than or equal to the original in size
        input.insert(input.begin(), false);       // add a "0" bit to the original sequence
        return input;                             // return the original data with "0" added
    }

The compression ratio can be either constant (some algorithms for compressing sound, images, and so on, such as A-law, μ-law, ADPCM, and block truncation coding) or variable. In the second case it can be determined either for each specific message or estimated by some criterion: the average (usually over some test data set); the maximum (the best-compression case); the minimum (the worst-compression case); or some other. The lossy compression ratio also depends strongly on the permissible compression error or quality, which usually acts as a parameter of the algorithm. In general, only lossy compression methods can provide a constant compression ratio.

The main criterion for distinguishing between compression algorithms is the presence or absence of losses. In general, lossless compression algorithms are universal in the sense that they can certainly be applied to data of any type, while the use of lossy compression must be justified. For some data types, distortion is not allowed in principle. Among them are symbolic data, where any change inevitably alters the semantics (programs and their source texts, binary arrays, and so on); vital data, where changes can lead to critical errors (for example, data obtained from medical measuring equipment or from the control devices of aircraft and spacecraft); and intermediate data that is repeatedly compressed and restored during multi-stage processing of graphic, sound, and video data.
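Returning to the flag trick from the pseudo-C++ example, the following Python sketch shows the same idea end to end, including the decoding side. It is a simplified variant under stated assumptions: it spends a whole flag byte instead of a single bit (so the worst-case growth is one byte), it uses zlib as a stand-in for the unspecified `arch` function, and the names `pack` and `unpack` are hypothetical.

```python
import os
import zlib

FLAG_COMPRESSED = b"\x01"  # payload is zlib-compressed
FLAG_STORED = b"\x00"      # payload is the original data, untouched


def pack(data: bytes) -> bytes:
    """Compress if that helps; otherwise store as is. Grows by at most one byte."""
    compressed = zlib.compress(data)
    if len(compressed) < len(data):
        return FLAG_COMPRESSED + compressed
    return FLAG_STORED + data


def unpack(blob: bytes) -> bytes:
    """Invert pack() by inspecting the leading flag byte."""
    flag, body = blob[:1], blob[1:]
    if flag == FLAG_COMPRESSED:
        return zlib.decompress(body)
    return body


for sample in (b"", b"abc" * 500, os.urandom(2048)):
    packed = pack(sample)
    print(len(sample), "->", len(packed), "bytes, round trip ok:", unpack(packed) == sample)
```

The flag is what lets the decoder tell the two cases apart, so the scheme stays lossless while bounding the worst case.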
At the heart of any compression method is a model of the information source or, more specifically, a model of its redundancy. In other words, compression relies on some knowledge of what kind of data is being compressed: without any such knowledge, no assumption can be made about which transformation will reduce the size of the message. This knowledge is used in both the compression and the decompression process. The redundancy model can also be built or parameterized during the compression step. Methods that can change the redundancy model based on the input data are called adaptive. Non-adaptive methods are usually highly specific algorithms designed for data with well-defined, unchanging characteristics. The vast majority of reasonably universal algorithms are adaptive to some extent.

Any compression method includes two mutually inverse transformations. The compression transformation produces a compressed message from the original. The decompression transformation recovers the original message (or an approximation of it) from the compressed one.

All compression methods fall into two main classes: lossless and lossy. The cardinal difference between them is that lossless compression makes it possible to restore the original message exactly. Lossy compression yields only an approximation of the original message, that is, one that differs from the original but stays within some predetermined error. These errors must be determined by another model, the model of the receiver, which specifies which data, and at what accuracy, matters to the receiver and what can acceptably be thrown away.

Lossy compression, in turn, makes it possible to achieve much higher compression ratios by discarding insignificant information that compresses poorly.
For example, the FLAC lossless audio compression algorithm in most cases compresses sound by a factor of 1.5 to 2.5, while the lossy Vorbis algorithm, depending on the quality setting, can compress by a factor of up to 15 while keeping acceptable sound quality.

Different algorithms may demand different amounts of resources from the computing system on which they run. In general, these requirements depend on the complexity and "intelligence" of the algorithm. As a general trend, the better and more versatile the algorithm, the greater the demands it makes on the machine. In specific cases, however, simple and compact algorithms may work better. System requirements determine an algorithm's consumer qualities: the less demanding the algorithm, the simpler, and therefore more compact, reliable, and cheap, the system it can run on.

Since compression and decompression algorithms work in pairs, the ratio between their system requirements also matters. Often, by complicating one algorithm, the other can be greatly simplified. Thus there are three options:

The compression algorithm is much more demanding than the decompression algorithm. This is the most common case, and it applies mainly where data is compressed once and then used repeatedly. Examples include digital audio and video players.

The compression and decompression algorithms have approximately equal requirements. This is the most acceptable option for a communication line, where compression and decompression each occur once at its two ends. Telephony is one example.

The compression algorithm is significantly less demanding than the decompression algorithm. This case is rather exotic. It can be used where the transmitter is an ultra-portable device with very scarce resources, such as a spacecraft or a large distributed network of sensors, or where the decompressed data is needed only in a very small percentage of cases, such as footage from video surveillance cameras.

Wikimedia Foundation. 2010.
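The difference between lossless and lossy compression discussed above can be illustrated with a small Python sketch. It is a toy under stated assumptions, not how FLAC or Vorbis work: the "signal" is a synthetic sine wave, the lossy step is plain uniform quantization with a hypothetical step size, and zlib serves as the lossless back end in both cases. The error bound of half a step plays the role of the predetermined error set by the receiver model.

```python
import math
import zlib


def quantize(samples, step):
    """Lossy step: keep only the index of the nearest multiple of step."""
    return [round(s / step) for s in samples]


def dequantize(indices, step):
    """Reconstruct an approximation of the original samples."""
    return [i * step for i in indices]


def packed_size(values):
    """Size in bytes after storing values as 16-bit integers and applying zlib."""
    raw = b"".join(v.to_bytes(2, "big", signed=True) for v in values)
    return len(zlib.compress(raw))


# Synthetic 16-bit-style signal (an assumption made for illustration).
samples = [round(1000 * math.sin(i / 25)) for i in range(5000)]
step = 50  # hypothetical quality parameter: a larger step means more loss

indices = quantize(samples, step)
restored = dequantize(indices, step)
max_error = max(abs(a - b) for a, b in zip(samples, restored))

print("lossless size:", packed_size(samples), "bytes")
print("lossy size:   ", packed_size(indices), "bytes")
print("max error:    ", max_error, "(bound:", step / 2, ")")
```

Raising `step` trades accuracy for size, which mirrors how a quality parameter drives the compression ratio of a lossy algorithm.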
Any substance can be compressed under external pressure, that is, it changes its volume to some degree. Gases can reduce their volume very significantly as pressure increases. Liquids change volume with external pressure to a lesser extent. The compressibility of solids is smaller still. Compressibility reflects the dependence of a substance's physical properties on the distances between its molecules (atoms). Compressibility is characterized by the compression ratio (also called the compressibility coefficient, the coefficient of all-round compression, or the coefficient of volumetric elastic expansion).

DEFINITION

The compression ratio is a physical quantity equal to the relative change in volume divided by the change in pressure that causes this change in the volume of the substance.

Notation for the compression ratio varies; $\beta$ is used here. As a formula, the compression ratio is written as

$$\beta = -\frac{1}{V}\frac{\Delta V}{\Delta p},$$

where the minus sign reflects the fact that an increase in pressure leads to a decrease in volume and vice versa. In differential form, the coefficient is defined as

$$\beta = -\frac{1}{V}\frac{dV}{dp}.$$

Volume is related to the density of the substance, so for pressure changes at constant mass we can write

$$\beta = \frac{1}{\rho}\frac{d\rho}{dp}.$$

The value of the compression ratio depends on the nature of the substance, its temperature, and its pressure.
In addition, the compression ratio depends on the type of process in which the pressure changes. For instance, the compression ratio in an isothermal process differs from that in an adiabatic process. The isothermal compression ratio is defined as

$$\beta_T = -\frac{1}{V}\left(\frac{\partial V}{\partial p}\right)_T,$$

where $\left(\frac{\partial V}{\partial p}\right)_T$ is the partial derivative at $T = \text{const}$. The adiabatic compression ratio can be found as

$$\beta_S = -\frac{1}{V}\left(\frac{\partial V}{\partial p}\right)_S,$$

where $\left(\frac{\partial V}{\partial p}\right)_S$ is the partial derivative at constant entropy ($S$). For solids, the isothermal and adiabatic compressibility coefficients differ very little, and this difference is often neglected. The adiabatic and isothermal compressibility coefficients are related by the equation

$$\frac{\beta_T}{\beta_S} = \frac{c_p}{c_V},$$

where $c_V$ and $c_p$ are the heat capacities at constant volume and at constant pressure.

Compression ratio units

The basic SI unit of the compressibility coefficient is

$$[\beta] = \text{Pa}^{-1} = \frac{\text{m}^2}{\text{N}}.$$

Examples of problem solving

EXAMPLE 1

Exercise

A cube of solid material with side $a$ is subjected to all-round pressure. The side of the cube decreases by $\Delta a$. Express the compression ratio of the cube if the pressure exerted on it changes by $\Delta p$ relative to the initial pressure.

Solution

In accordance with the definition of the compression ratio, we write:

$$\beta = -\frac{1}{V}\frac{\Delta V}{\Delta p}. \qquad (1.1)$$

Since the change in the side of the cube caused by the pressure is $\Delta a$, the volume of the cube after compression ($V'$) can be represented as:

$$V' = (a - \Delta a)^3 = a^3 - 3a^2\,\Delta a + 3a\,\Delta a^2 - \Delta a^3. \qquad (1.2)$$

Therefore, with $V = a^3$, we write the relative change in volume as:

$$\frac{\Delta V}{V} = \frac{V' - V}{V} = \frac{-3a^2\,\Delta a + 3a\,\Delta a^2 - \Delta a^3}{a^3}. \qquad (1.3)$$

$\Delta a$ is small, so we take the terms containing $\Delta a^2$ and $\Delta a^3$ to be equal to zero; then we can assume that:

$$\frac{\Delta V}{V} \approx -\frac{3\,\Delta a}{a}. \qquad (1.4)$$

Substituting the relative volume change from (1.4) into formula (1.1), we have:

$$\beta = \frac{3\,\Delta a}{a\,\Delta p}.$$

Answer

$\beta = \frac{3\,\Delta a}{a\,\Delta p}$
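As a numerical sanity check on the cube exercise, the Python sketch below compares the exact relative volume change of a cube whose side shrinks from $a$ to $a - \Delta a$ with the linearized value $-3\Delta a / a$, and then evaluates $3\Delta a / (a\,\Delta p)$. The symbols $a$, $\Delta a$, and $\Delta p$, the function names, and all numeric values are assumptions chosen for illustration; none of them come from the original exercise.

```python
def exact_relative_volume_change(a: float, da: float) -> float:
    """(V' - V) / V for a cube whose side shrinks from a to a - da."""
    return ((a - da) ** 3 - a ** 3) / a ** 3


def linear_relative_volume_change(a: float, da: float) -> float:
    """First-order approximation that drops the da**2 and da**3 terms."""
    return -3 * da / a


def cube_compressibility(a: float, da: float, dp: float) -> float:
    """Compression ratio obtained from the linearized volume change."""
    return 3 * da / (a * dp)


# Hypothetical values: a 10 cm cube, a 1 micrometre shrink, a 1 MPa pressure rise.
a, da, dp = 0.1, 1e-6, 1e6

exact = exact_relative_volume_change(a, da)
approx = linear_relative_volume_change(a, da)
print("exact  dV/V:", exact)
print("linear dV/V:", approx)
print("relative gap:", abs(exact - approx) / abs(approx))
print("beta (1/Pa):", cube_compressibility(a, da, dp))
```

For a shrink this small the two volume changes agree to within roughly one part in $10^5$, which is why dropping the higher-order terms is harmless here.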